OMS Upgrade

Back up the databases

sudo docker stop ${CONTAINER_NAME}

sudo docker stop oms_4.2.8
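Before backing up, it can help to confirm that the old container has really stopped. The sketch below is one possible check, not part of the official procedure. The function name `container_stopped` is hypothetical, and the sketch assumes the current user can run `docker` directly (otherwise run it under `sudo`, as in the stop command above).

```shell
# Hypothetical helper: returns 0 if the container exists and is not running,
# 1 if it is still running, and 2 if docker cannot inspect it (for example,
# the container name is wrong).
container_stopped() {
  state=$(docker inspect -f '{{.State.Running}}' "$1" 2>/dev/null) || return 2
  [ "$state" = "false" ]
}
```

For example, `container_stopped oms_4.2.8 && echo "stopped"` prints a message only once the container is down.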


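Log in to the CM heartbeat database specified in the configuration file and delete some of its useless records to shorten the backup time.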

Log in to the CM heartbeat database specified in the configuration file:

mysql -h172.20.85.200 -P3306 -uscm -p -D_cm_hb

Delete the useless records:

The heatbeat_sequence table is used to report heartbeats and to obtain auto-increment IDs.

delete from heatbeat_sequence where id < (select max(id) from heatbeat_sequence);

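Run the following commands to manually back up the rm, cm, and cm_hb databases as SQL files, and confirm that the size of each file is not 0.

In a multi-region scenario, the cm_hb database of every region must be backed up. For example, with two regions, four databases need to be backed up: rm, cm, cm_hb1, and cm_hb2.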

mysqldump -h172.20.85.200 -P3306 -uscm -p --triggers=false _cm_hb > /home/admin/_cm_hb.sql

mysqldump -h172.20.85.200 -P3306 -uscm -p --triggers=false _cm > /home/admin/_cm.sql

mysqldump -h172.20.85.200 -P3306 -uscm -p --triggers=false _cm_hb_pbx > /home/admin/_cm_hb_pbx.sql

mysqldump -h172.20.85.200 -P3306 -uscm -p --triggers=false _rm > /home/admin/_rm.sql
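The requirement that no backup file is 0 bytes can be checked with a short script instead of by eye. The following is a minimal sketch; the function name `check_backups` is hypothetical and only illustrates the check.

```shell
# Hypothetical helper: prints one line per file and returns 0 only when
# every file passed as an argument exists and has a size greater than 0.
check_backups() {
  rc=0
  for f in "$@"; do
    if [ -s "$f" ]; then
      echo "OK     $f"
    else
      echo "EMPTY  $f"
      rc=1
    fi
  done
  return "$rc"
}
```

With the file names used above, it could be called as `check_backups /home/admin/_cm_hb.sql /home/admin/_cm.sql /home/admin/_cm_hb_pbx.sql /home/admin/_rm.sql`. A non-zero exit status means at least one dump is missing or empty and should be redone before continuing.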

Load the downloaded OMS Community Edition installation package into the local Docker image repository

docker load -i oms_4.2.9-ce.tar.gz

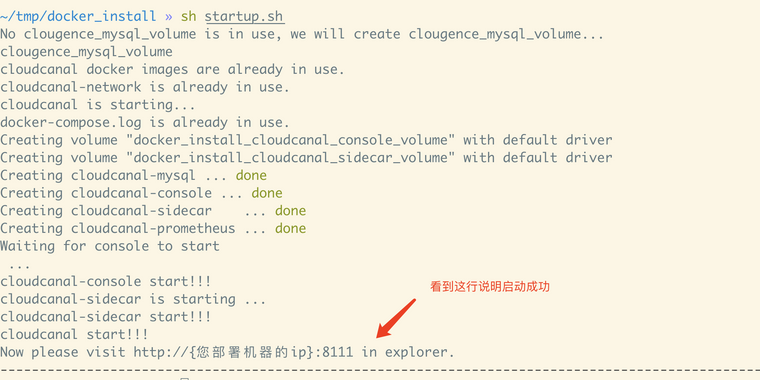

Start a new OMS Community Edition V4.2.9-CE container

docker run -dit --net host \

When multiple nodes in the same region have identical configuration files (mainly cm_nodes), docker_init.sh only needs to be run once per region.
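One way to decide whether a region qualifies for a single docker_init.sh run is to compare the nodes' configuration files. The sketch below assumes a copy of each node's configuration file has already been collected onto one machine. The function name `configs_identical` is hypothetical, and a byte-for-byte comparison is stricter than strictly necessary, since only fields such as cm_nodes have to match.

```shell
# Hypothetical helper: returns 0 when every file given is byte-identical to
# the first one, 1 when any file differs, and 2 when no file is given.
configs_identical() {
  [ "$#" -ge 1 ] || return 2
  first=$1
  shift
  for f in "$@"; do
    cmp -s "$first" "$f" || return 1
  done
  return 0
}
```

If the function returns 1, inspect the differences (for example with `diff`) to see whether they affect cm_nodes before deciding how many times to run docker_init.sh.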